What is Understandability?

Understandability is speaking to be understood, and listening to understand.

In our world, understandability is the degree to which the speaker’s message is understood by the listener. It is a measure of communication ability. It is the oral and auditory engine that makes the world go around. Without understandability, we might as well all be talking into thin air whilst wearing earmuffs.

In its simplest form, a successful dialogue indicates sufficient understandability on the part of both the speaker and the listener. The meaning and message of both parties are communicated efficiently and without frustration.

Understandability is one of those concepts that everyone can relate to and yet takes for granted. Everyone “knows” when they understand what they hear. However, identifying the component parts of understandability, and how they work together to reach the required level, is a complex story. That story is where this paper begins.

The Measure that Matters

What, then, is the difference between simply “the ability to speak English” and understandability? If I want to know whether my prospective hire has the ability to communicate and be understood, why wouldn’t I just ask him or her to take an English test? The answer is important to your understanding of understandability.

English tests measure many things, and these things are often familiar. Typically, the results of an English test provide information on components such as vocabulary, pronunciation, rhythm, fluency, accuracy, intonation, grammar, comprehension and much more.

This type of breakout has a long tradition in academic testing, the type of testing used to determine admission to secondary and higher education institutions. We refer to this type of measurement as measuring “academic knowledge” about the language. While this may be useful for academic admissions, it is not what you are interested in when you are deciding whether your prospective hire will be understood by your customers.

Understandability is the measure that matters when we are qualifying people who will be speaking with your customers.

We also use the term language power to describe the ability to use a language: the ability to convey meaning to the listener. Understandability derives from language power. Interestingly, language power and academic knowledge of a language often do not correlate, and examples of this abound. Think of students in Asia who are very book smart and have studied for years to pass the English language exams required to enter university in the West. These students often receive very high scores and are admitted to Western universities. Unfortunately, far too many of them struggle at university because they lack sufficient language power and their speech lacks understandability. This causes frustration and many communication challenges.

Conversely, the world is full of examples of immigrants who have mastered their new country’s language by experience and by living it. They need to find work, and they manage to develop enough language power to be understood. They achieve the level of understandability required for their occupation. And yet they may not possess academic knowledge of the language or of its underlying structure and grammar, and they may not achieve passing scores on typical English tests. The reason is clear: English tests measure neither language power nor understandability.

Understandability is Specific

Let us consider the immigrant who has mastered enough of the language to obtain employment. He or she has mastered the particular vocabulary, usage, phrases and terminology for this particular job. This is a subset of the English language. It is specific to how English is used in this part of the world, and it contains words and constructs that carry meaning and matter in this industry and to the particular business that hired them.

It is important to note that this employee’s success implies nothing about the new hire’s general language ability. It does not mean that he or she has sufficient language power or understandability to work in another industry, in another part of the world, or for a different type of business.

Language power and understandability are measurements that are specific to geography, industry and to a particular business.

Measurement Challenges

What does it take to achieve understandability? Is certain speech too fast to be understood? Is certain speech too slow to be understood? Are certain accents too heavy to be understood? Will certain mispronunciations make speech impossible to understand? Is the use of certain vocabulary essential to being understood in a specific industry for a specific business? The answers to these questions are complex, and this complexity is what makes it impossible for traditional academic English tests to measure understandability.

The reasons are interesting.

Words correct per minute (WCPM) is a traditional measure of fluency in academic testing. Very often, cutoffs are set so that speech slower than X WCPM fails, and speech faster than Y WCPM fails as well.
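The two-sided cutoff can be sketched in a few lines. This is only an illustration: the function names and the values chosen for X and Y are hypothetical, since real tests set their own thresholds.

```python
def words_correct_per_minute(correct_words: int, duration_seconds: float) -> float:
    """Correctly produced words, scaled to a 60-second window."""
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    return correct_words * 60.0 / duration_seconds


def passes_fluency_cutoff(wcpm: float, min_wcpm: float, max_wcpm: float) -> bool:
    """Fail if slower than min_wcpm (X) or faster than max_wcpm (Y)."""
    return min_wcpm <= wcpm <= max_wcpm


# Hypothetical cutoffs for illustration only.
X, Y = 90.0, 180.0

rate = words_correct_per_minute(correct_words=45, duration_seconds=40)
print(rate, passes_fluency_cutoff(rate, X, Y))  # 67.5 False
```

Note that the verdict depends only on the rate; nothing in this logic looks at where the speaker paused.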

The weakness in this logic is that slow speech can very often be perfectly understandable because the slowness is due to the speaker pausing in the correct places.

These correctly placed pauses often enhance the understandability of the speech. Conversely, unwarranted and misplaced pauses decrease understandability. Similarly, fast speech can also be well understood when it is clearly articulated and still contains pauses in the right places, which enhance the message and its understandability.
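The following sketch makes the weakness concrete. It assumes a hypothetical annotation marking where phrase boundaries fall and where each speaker paused. Two speakers say the same sentence in the same time, so their WCPM is identical, yet one pauses at the phrase boundaries and the other does not. The `Response` type and the "well-placed pause share" are illustrative inventions, not a standard metric.

```python
from dataclasses import dataclass


@dataclass
class Response:
    words: list[str]          # transcribed words, all assumed correct here
    duration_seconds: float
    pause_after: list[int]    # indices of words that are followed by a pause


def wcpm(r: Response) -> float:
    """Words correct per minute for the whole response."""
    return len(r.words) * 60.0 / r.duration_seconds


def well_placed_pause_share(r: Response, phrase_ends: set[int]) -> float:
    """Share of pauses that fall at a phrase boundary; 0.0 if there are no pauses."""
    if not r.pause_after:
        return 0.0
    hits = sum(1 for i in r.pause_after if i in phrase_ends)
    return hits / len(r.pause_after)


words = "Your order shipped on Monday and should arrive by Friday".split()
phrase_ends = {4, 7}  # hypothetical: boundaries after "Monday" and "arrive"

a = Response(words, 5.0, pause_after=[4, 7])  # pauses at phrase boundaries
b = Response(words, 5.0, pause_after=[1, 5])  # pauses after "order" and "and"

print(wcpm(a), wcpm(b))  # 120.0 120.0
print(well_placed_pause_share(a, phrase_ends), well_placed_pause_share(b, phrase_ends))  # 1.0 0.0
```

A WCPM cutoff treats both speakers identically, while a listener would likely find speaker A easier to follow.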

In like manner, how much of an accent is too much? At what point does the speaker’s accent make the speech too difficult to understand and cause the listener frustration? If you were to ask a typical online consumer, “How much of an accent are you willing to accept from your customer service agent?”, you would find that he or she has no measuring scale for accent; he or she simply “knows” when they do not understand the person speaking to them. Additionally, the concept of accent itself is quite complex.

This is what traditional tests are not able to measure. To measure what the online consumer “knows” requires us to transform human intelligence into machine intelligence.

Enter AI – Artificial Intelligence

You have heard a great deal about artificial intelligence. It has impressive capabilities; however, it is often overhyped. Let us focus on the part that is real, measurable and relevant to improving the way you identify prospective customer service agents.

For illustration purposes, we will focus on the North American consumer. Naturally, this is a broad market; however, we will use this demographic as our target listener, served by an offshore customer service agent from Asia.

The problem we are trying to solve occurs when the North American consumer says phrases such as “I didn’t understand you, could you say that again?”, “Could you repeat that, please?” or “What did you say?” The objective of the system is to prevent these events from happening. The North American consumer has no problem determining whom he or she does and does not understand, and thousands of calls are transferred to supervisors because of this lack of understandability.

Labels and Features

The North American consumer has “human intelligence” that allows him or her to quickly assess the understandability of the customer service agent.

For argument’s sake, suppose we asked the North American consumer to listen to any number of calls. They could classify each speaker’s understandability into three categories.

These categories could be:

a) Unacceptable

b) Acceptable

c) Superior

In the world of artificial intelligence, these categories are known as “Labels”. So far, we know that our North American consumers can instinctively sort the speech they hear from a customer service agent into these three categories. This helps us teach the machine what it needs to know.

The other part of the artificial intelligence equation is known as “Features”. This is a bit trickier, but we will explain. The North American consumer is able to label the speech he or she hears, regardless of what the speech is about. The agent could be reading off the items from an invoice, or the agent could be describing a new promotion that is available. Regardless of the content of the speech, the North American consumer is able to label it. The reason for this lies in the very nature of speech itself.

By nature, speech is a signal composed of many components. Some of the components relate to pitch, tone and frequency, while others relate to the timing and duration of vowels, consonants and pauses. Each of these types of components is in turn made up of many subcomponents, measured precisely in milliseconds and kilohertz. This large and broad array of components and subcomponents that make up the speech signal is known as features.
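As a small illustration of the timing side of feature extraction, the sketch below summarizes a hypothetical segmentation of one utterance (segment type, start and end in milliseconds) into a handful of features. The segment list and feature names are assumptions for illustration; a real system would obtain segments from an aligner and would extract many more features, including pitch and frequency measures.

```python
from statistics import mean

# Hypothetical segmentation of one utterance: (segment type, start ms, end ms).
segments = [
    ("consonant", 0, 80),
    ("vowel", 80, 210),
    ("pause", 210, 520),
    ("consonant", 520, 590),
    ("vowel", 590, 700),
    ("pause", 700, 820),
    ("vowel", 820, 980),
]


def timing_features(segs):
    """Summarize segment durations (ms) into a small feature dictionary."""
    durations = {}
    for kind, start, end in segs:
        durations.setdefault(kind, []).append(end - start)
    total_ms = segs[-1][2] - segs[0][1]
    features = {}
    for kind, values in durations.items():
        features[f"{kind}_count"] = len(values)
        features[f"{kind}_mean_ms"] = mean(values)
    # Fraction of the utterance spent in pauses.
    features["pause_ratio"] = sum(durations.get("pause", [])) / total_ms
    return features


print(timing_features(segments))
```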

Part of our work as scientists is to look for features in the speech that we want to categorize or label. The concept of feature extraction is central to artificial intelligence. Our scientists in digital signal processing are continually developing new ways to extract more and more features from speech signals. The more relevant features we can extract from speech, the more effectively we can transform human intelligence into machine intelligence. Let us explain.

Building Models

We’ve mentioned that the North American consumer can label the understandability of speech, regardless of what the speech is about. Therefore, our working hypothesis is that speech that is understandable, regardless of the topic, has a set of feature measures that are different from speech that is not understandable, regardless of the topic. Our goal therefore is to extract the relevant features so that we can “build a model” of understandable speech.

Think about a typical response to a customer question such as “Where is my order?” The agent’s response might contain a few sentences. We can use our feature extraction techniques to identify the low-level components and subcomponents of this speech, and each of these elements is measurable in counts, kilohertz, milliseconds or other units.

We can perform this feature extraction on thousands of such audio samples in order to create a large data set of features. Are you with me so far? At this point, we know that we can break speech apart into components and subcomponents known as features, and that North American consumers can sort speech into categories known as labels. Let’s put it all together.

Let’s assume we have collected 1,000 samples of speech from Asian customer service agents, and that this sample contains some distribution of unacceptable, acceptable and superior speech. Enter the concept of human rating. We mentioned that we need to transform human intelligence into machine intelligence. To capture the human intelligence, we create a rubric and train human raters to categorize the speech samples into the three labels: unacceptable, acceptable and superior. We might use half a dozen raters, each of whom rates all 1,000 samples. This allows us to examine the disagreements between the human raters, which is known as interrater error. Many of the samples may need to be reviewed by a subgroup of the raters in order to reach a final consensus on the category they belong to. Now we have a thousand samples that have been labeled by humans. And, of course, these humans represent the North American consumer and should be drawn from that demographic. Now we have captured our human intelligence!
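One simple way to reach a consensus label and flag disagreements for review is a majority vote with an agreement threshold, sketched below. The ratings and the two-thirds threshold are hypothetical; production rating programs often use more formal agreement statistics, but the idea is the same.

```python
from collections import Counter

# Hypothetical ratings: sample id -> one label from each of six raters.
ratings = {
    "s001": ["acceptable"] * 6,
    "s002": ["superior", "superior", "acceptable", "superior", "superior", "superior"],
    "s003": ["unacceptable", "acceptable", "acceptable", "unacceptable", "superior", "acceptable"],
}


def consensus(labels, min_agreement=2 / 3):
    """Return (majority label, share of raters who chose it, needs_review flag)."""
    label, votes = Counter(labels).most_common(1)[0]
    agreement = votes / len(labels)
    return label, agreement, agreement < min_agreement


for sample_id, labels in ratings.items():
    print(sample_id, consensus(labels))
```

Here `s003` would be sent back to a subgroup of raters, because only half of them chose the majority label.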

At the same time, on the machine side, we are busy extracting features: the components and subcomponents that make up the speech signal, as well as elements that capture how well the speaker can answer a question or express an opinion. Is the response on topic, or is the speaker reciting something by rote, like a prayer, because he or she is at a loss as to how to respond? All of these elements can be broken down into the features we need, and these features will help us categorize the speaker’s understandability.

The science of artificial intelligence has developed tools that can be put to work on large data sets of features. Essentially, these tools look for patterns. Very often, these patterns would never be visible to the naked eye. As well, the patterns may be counterintuitive and discovered by the tool because the tool has no preconceptions about which patterns ought to matter. Think of the artificial intelligence tool as going back and forth across all of the variety and complexity of the features in the speech data set in an effort to paint a multidimensional picture of what this data set looks like!

At LanguaMetrics, we call this multidimensional picture the LanguaVector. Consider the 3-D view of the earth shown below:

The LanguaVector can be envisioned as the collection of features used to measure understandability.

Pitches and frequencies can be thought of as mountain ranges which vary in their location, altitude and topography. Individual metrics on vowels and consonants can be thought of as various cities around the world, while timing related metrics such as pauses and runs can be envisioned as the flowing rivers and oceans around the world. It is a complex picture which requires advanced AI tools to model.

We are almost there. As you can see from the illustration above, the LanguaVector is a complex multidimensional representation of what understandability “looks like”. To arrive at our goal of transforming human intelligence into machine intelligence, we have one more step. The key element in this step lies in our ability to compare speech from one of our tests to our AI models of understandability. While the multidimensional picture of understandability appears complex, fortunately we are able to model it mathematically with precision. This becomes our standard, and we measure speakers’ test responses against it.

We then build a scoring mechanism based on how close the speaker’s test responses are to our AI models of understandability. This lets us refine our measurement further by setting as many thresholds as we need in order to put the understandability scores to use.
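The paper does not describe how its models measure closeness, so the sketch below swaps in a deliberately simple stand-in: a nearest-centroid model. It builds one reference vector per label from human-labeled training features, then converts distances to each reference into normalized weights (a crude stand-in for probabilities). The feature values and function names are hypothetical.

```python
import math


def fit_centroids(features, labels):
    """Hypothetical 'model': one mean feature vector (centroid) per label."""
    groups = {}
    for row, label in zip(features, labels):
        groups.setdefault(label, []).append(row)
    return {
        label: [sum(col) / len(rows) for col in zip(*rows)]
        for label, rows in groups.items()
    }


def closeness(model, sample):
    """Softmax over negative Euclidean distances: nearer centroids get more weight."""
    weights = {label: math.exp(-math.dist(sample, c)) for label, c in model.items()}
    total = sum(weights.values())
    return {label: w / total for label, w in weights.items()}


# Hypothetical, already-normalized feature vectors with human labels.
X = [[0.9, 0.9], [0.8, 1.0], [0.6, 0.6], [0.5, 0.7], [0.2, 0.3], [0.1, 0.2]]
y = ["superior", "superior", "acceptable", "acceptable", "unacceptable", "unacceptable"]

model = fit_centroids(X, y)
weights = closeness(model, [0.82, 0.9])
print(max(weights, key=weights.get))  # superior
```

Real systems use far richer models and many more features; the point here is only that "how close is this response to each model" can be expressed as numbers.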

As we’ve mentioned, understandability is specific to geographical region, industry and business, the role of the speaker and the demographic of the listener. By using artificial intelligence, we create precise mathematical models to serve as our standards for understandability. We measure our speaker’s test responses against our AI models to see if they reach the level of understandability that our clients have deemed necessary for their customers.

That completes the journey to understand how we are able to transform human intelligence into machine intelligence, so that you can confidently hire speakers who will be understood by your customers. We’ve explained the concept of how we do this, but we’d also like to help make you a smart shopper. How can you know whether an AI system is good? As we’ve mentioned, AI is all the rage, but it’s not every day that you hear people talking about how to quantify how good an AI system is. Let us take a look.

How Good is an AI System – an Executive’s Guide

There is no question that AI systems are complex. They are created by data scientists and engineers who have had many years of specialized training. The question, then, remains: how should a business executive determine whether an AI system is good for their business, whether it is as good as the vendor says it is, and how it compares to other systems?

AI relies a great deal on the science of probability. Unfortunately, this makes it harder to answer the question of a system’s accuracy. AI systems make decisions based on how likely something is to belong to a certain category. For example, in the models we have described, we are measuring how close the speaker’s test results are to our AI models of understandability. In fact, we are measuring probabilities.

Going back to our models, let us say that we have a score from 1 to 100 that tells us how the understandability of our speaker’s test results compares with our AI models of understandability. We then create thresholds in which 70 and below is categorized as unacceptable, 71 through 90 as acceptable, and 91 through 100 as superior. Since AI is based on probabilities, there is a certain probability that a given speaker is correctly placed into his or her category, “correct” meaning that the machine labeled the speaker the same way a human rater would have.
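The example thresholds translate directly into code. This sketch assumes integer scores from 1 to 100, as in the text; the function name is hypothetical.

```python
def label_for_score(score: int) -> str:
    """Map a 1-100 understandability score to the example bands in the text."""
    if not 1 <= score <= 100:
        raise ValueError("score must be between 1 and 100")
    if score <= 70:
        return "unacceptable"
    if score <= 90:
        return "acceptable"
    return "superior"


print([label_for_score(s) for s in (55, 70, 71, 90, 91)])
# ['unacceptable', 'unacceptable', 'acceptable', 'acceptable', 'superior']
```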

Sometimes we may prefer to err in one direction over another. For example, we may be more concerned about maintaining high understandability than about finding as many prospective hires as possible. In other circumstances, our priorities might be reversed. Understanding how your system works is critical to making it perform in the manner that is most valuable to you.
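The trade-off shows up as soon as you move the hiring cutoff. The sketch below uses ten hypothetical candidates, each with a machine score and a human verdict, and counts the two kinds of mistakes at three cutoffs. The data is invented purely to illustrate the direction of the trade-off.

```python
# Hypothetical (machine score, human verdict) pairs for ten candidates.
candidates = [
    (95, "good"), (88, "good"), (82, "good"), (76, "poor"), (74, "good"),
    (72, "poor"), (69, "good"), (65, "poor"), (58, "poor"), (40, "poor"),
]


def outcomes(cutoff):
    """Count mistakes when every candidate scoring at or above `cutoff` is hired."""
    poor_hired = sum(1 for s, v in candidates if s >= cutoff and v == "poor")
    good_missed = sum(1 for s, v in candidates if s < cutoff and v == "good")
    return poor_hired, good_missed


for cutoff in (60, 71, 80):
    print(cutoff, outcomes(cutoff))
# 60 (3, 0)
# 71 (2, 1)
# 80 (0, 2)
```

Raising the cutoff lets fewer poor speakers through but misses more good ones; lowering it does the reverse.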

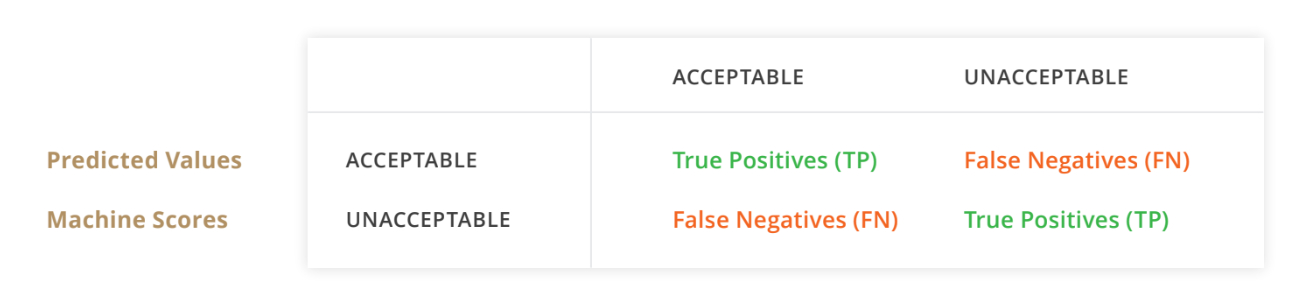

Interestingly, the tool used to measure how good an AI system is goes by the name “confusion matrix”. The confusion matrix is used to produce the three metrics that describe an AI system like the one we have been describing, which classifies prospective agents. For an executive’s needs, the three important metrics are precision, recall and F-measure. The cells of the confusion matrix serve as the inputs to these three metrics.

Sample Confusion Matrix: Test Results Rated by Humans
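A confusion matrix is simply a table of counts: how often each human label was paired with each machine label. The sketch below builds one from hypothetical label lists. It places human labels on the rows and machine labels on the columns, which is one common convention; the sample matrix pictured in this paper may be oriented differently.

```python
from collections import Counter

LABELS = ["unacceptable", "acceptable", "superior"]


def confusion_matrix(human, machine, labels=LABELS):
    """Rows are human labels, columns are machine labels; cells are counts."""
    counts = Counter(zip(human, machine))
    return [[counts[(h, m)] for m in labels] for h in labels]


# Hypothetical labels for six test responses.
human = ["acceptable", "superior", "unacceptable", "acceptable", "superior", "unacceptable"]
machine = ["acceptable", "acceptable", "unacceptable", "unacceptable", "superior", "unacceptable"]

for label, row in zip(LABELS, confusion_matrix(human, machine)):
    print(f"{label:>12}", row)
```

The diagonal cells count agreements between the machine and the human raters; every off-diagonal cell is a specific kind of mistake.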

The Three Metrics of an AI System

You do not need to understand the details of how these metrics are calculated in order to be a smart shopper when it comes to AI systems. What is important is understanding what each metric tells you, so that you can focus on what will help improve your business.

The Basics

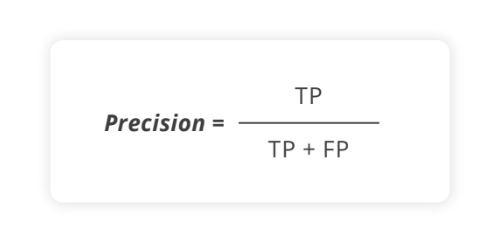

Precision is the quality-oriented measure of an AI system. As its name implies, it is about how precise the system is in making its determinations: of all the speakers the system selects as meeting your criteria, precision tells you what share actually do. It takes data from the confusion matrix and uses the following formula:

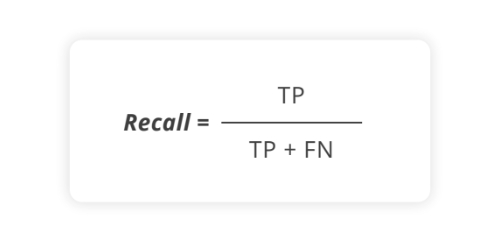

Mr. Executive, if you are more worried about the possibility of a poor speaker joining your project than about the possibility of missing a good speaker, then the metric that matters most to you is precision. Conversely, if market demands are pressuring you to find every possible good speaker, and this matters more than letting a small number of poor speakers through, then the metric that matters to you is recall. Recall takes data from the confusion matrix and uses the following formula:

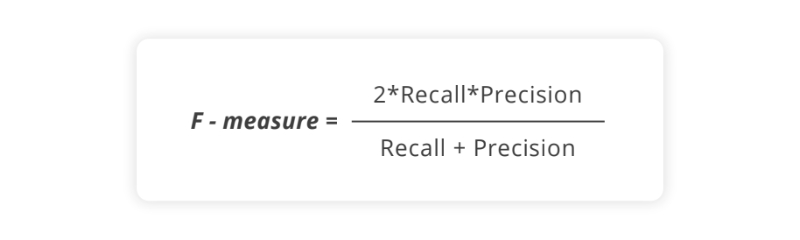

Lastly, if you are equally concerned about both of these conditions, then the metric that balances them is known as the F-measure, or F1. It is the harmonic mean of precision and recall, calculated with the following formula:

F1 = 2 × (Precision × Recall) / (Precision + Recall)
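The three metrics can be computed from the cells of a confusion matrix, treating each label in turn as the "positive" class. The sketch assumes human labels on the rows and machine labels on the columns, and uses a small hypothetical matrix; libraries such as scikit-learn provide equivalent, well-tested functions.

```python
def per_class_metrics(matrix, labels):
    """Precision, recall and F1 per label; rows = human, columns = machine."""
    results = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]                          # both said `label`
        predicted = sum(row[i] for row in matrix)  # machine said `label`
        actual = sum(matrix[i])                    # humans said `label`
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results[label] = (precision, recall, f1)
    return results


labels = ["unacceptable", "acceptable", "superior"]
matrix = [[2, 0, 0],   # hypothetical counts
          [1, 1, 0],
          [0, 1, 1]]

for label, (p, r, f) in per_class_metrics(matrix, labels).items():
    print(f"{label:>12}  precision={p:.2f}  recall={r:.2f}  F1={f:.2f}")
```

In this example, the system never wrongly labels anyone "superior" (precision 1.00 for that label) but finds only half of the speakers the humans rated superior (recall 0.50), which is exactly the kind of imbalance worth discussing with a vendor.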

Armed with this terminology you can have a much more effective discussion with an AI vendor and you now have the ability to compare apples with apples in the complex world of AI.

Important Elements – Bias and Sample Size

In addition to the three metrics described above there are two areas an executive needs to bring to the table when shopping for an AI system. These are the matters of bias and sample size.

Bias

AI models are only as good as the data from which they are built. It is all too easy for bias to creep into AI models, because finding sufficient amounts of quality data is time-consuming and expensive. For this reason, it is important for the executive to obtain statements from the vendor documenting that their system is not biased for or against any particular demographic group. This is particularly important in systems that use speech as a measurement.

It has been well documented that speech systems, for convenience, may have been developed with whatever data was most readily available. In the IT world, readily available data often comes from white, well-educated, male engineers. The result is a system that represents their speech and is therefore not well tuned to speech from people outside this demographic.

AI models should be built from data that represents the people who will be measured by the system.
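One concrete question to put to a vendor is whether the system agrees with human raters equally well across demographic groups. The sketch below computes that agreement rate per group from hypothetical evaluation records; the group names and data are placeholders. A large gap between groups, as in this invented example, would be a warning sign worth investigating.

```python
from collections import defaultdict

# Hypothetical evaluation records: (demographic group, human label, machine label).
records = [
    ("group_a", "acceptable", "acceptable"),
    ("group_a", "superior", "superior"),
    ("group_a", "unacceptable", "unacceptable"),
    ("group_a", "acceptable", "acceptable"),
    ("group_b", "acceptable", "unacceptable"),
    ("group_b", "superior", "superior"),
    ("group_b", "acceptable", "unacceptable"),
    ("group_b", "unacceptable", "unacceptable"),
]


def agreement_by_group(rows):
    """Share of samples per group where the machine matched the human label."""
    totals = defaultdict(int)
    matches = defaultdict(int)
    for group, human, machine in rows:
        totals[group] += 1
        matches[group] += human == machine
    return {g: matches[g] / totals[g] for g in totals}


print(agreement_by_group(records))  # {'group_a': 1.0, 'group_b': 0.5}
```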

Sample Size

Even if the AI developer is able to obtain quality data with a demographic distribution that prevents a biased system, there is still the challenge of having enough of this data. As mentioned, data is expensive. Executives involved in procuring AI systems should ask how many samples were used to build the models in the system. Further, they should ask how often the models have been improved, and over what period of time.

This speaks to the overall maturity of the AI system.
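Sample size also determines how much trust a reported accuracy figure deserves. The sketch below uses the standard normal approximation for a 95% confidence interval on a proportion; the 85% accuracy figure is hypothetical. With the same measured accuracy, the margin of error is roughly ±10 points on 50 samples and about ±2 points on 1,000 samples.

```python
import math


def margin_of_error(accuracy: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% half-width for an accuracy measured on n samples."""
    return z * math.sqrt(accuracy * (1 - accuracy) / n)


for n in (50, 200, 1000):
    print(n, round(margin_of_error(0.85, n), 3))
```

The approximation is rough for very small samples or accuracies near 0% or 100%, but it is enough to show why a vendor's sample counts matter.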

Conclusion

Communication requires understandability. Your business needs to know whether your people can communicate effectively with your customers. Therefore, a measure of understandability is the tool you require. The tool used to measure understandability is very different from the tools used to measure English language ability, particularly the academic variety used for college admissions.

Native speakers instantly recognize whether speech is understandable. Within seconds, the listener determines whether the speaker can be understood. Although this determination is made rapidly, it is the result of a very complex decision-making process in the brain. This is why traditional English language tests fail at the challenge of measuring understandability. Measuring understandability requires artificial intelligence models built on the judgments of properly trained human raters. Artificial intelligence and machine learning allow us to transform human intelligence into machine intelligence: we train the machine to make the determination that the human would. The machine can then measure understandability.

Knowing the right questions to ask when purchasing an AI-based measurement system is very important for the executive, as is bringing the right terminology to the table. Reviewing and discussing the confusion matrix and the system’s precision, recall and F-measure is therefore critical. Examining bias and sample size is equally important in understanding an AI system.

In closing, it is also important to understand that AI is constantly evolving. It is a relatively young science and its applications are growing around the world every day. This is all the more reason to make a concerted effort to see beyond the hype in order to make an informed procurement decision.